Building a new home server

See you next year for when I get tired of this setup

posted 2024-12-21

Warning

This post was made a long time ago. The information inside of it may be outdated, and the writing may not accurately reflect my current self.

A few days ago, I got an email from my server running Proxmox:

well that's not good

— NotNite (@notnite.com) Dec 8, 2024 at 3:31 PM


One of the drives was showing signs of failure. This was bad for me, as this drive was part of a RAID group that I mistakenly set up to be RAID 0 several years ago. I realized it was RAID 0 after I got this email. Whoops!

I had been wanting to build a new server + NAS combination for a while, so this seemed like a good opportunity to do it, just with some extra time pressure as spice on top. Let’s talk about it!

The NotNet server saga

The first server I ever owned was a hand-me-down from my dad’s workplace. It was an old Dell OptiPlex, and it could run a web server and a really limited Minecraft world before running out of resources. Later that year, I ended up buying a Hetzner dedicated server, which would host most of my projects. I longed for something more local, though - somewhere that I could experiment with and, more importantly, get better ping to. Enter one Christmas Day, when I was able to build my own home server. This is the one that this website was hosted on, up until today!

Eventually, I started using NixOS instead of Debian for the LXC containers in Proxmox, and I quickly learned that it was a big mistake. This caused me lots of grief with bugs in the integration and a general feeling of instability - especially when there was practically no way to test service changes locally. I also encountered issues where my Mastodon instance couldn’t update to patch a CVE because nixpkgs didn’t support that version of Yarn lockfile at the time.

I ended up buying a second Hetzner dedicated server, installing Rocky Linux on it, and using Docker for everything. Instead of using Kubernetes or Docker Swarm, I just use some Docker Compose files with a bit of shell scripting to glue it all together and run backups every day. I don’t need the complexity of an orchestrator, and this setup is very easy to test locally. I’m still in the process of migrating everything off of the first server 🥲.

I chose Docker over Podman because Docker Compose is battle tested and podman-compose seemed iffy. I would prefer the ability to make rootless containers, but I really wanted to be able to use YAML to declare stuff instead of whatever the hell a Quadlet is.

The layout of the files is like this:

    composes/
        (stack name)/
            compose.yaml
            (optional config files, like a Caddyfile)
    scripts/
        (misc shell scripts for server administration)
    update.sh
    backup.sh
    volume-exclude.txt

Every day, a systemd timer runs backup.sh, which backs up the folders in the volumes directory using BorgBackup. Every 30 minutes, another systemd timer runs update.sh, which pulls the latest changes from a Git repository and pulls/rebuilds the latest versions of every container.
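To make that flow concrete, here is a minimal sketch of what scripts like these could look like. It is not the actual update.sh or backup.sh: the function names, the `DRY_RUN` switch, and the Borg repository and volumes paths passed to `backup_volumes` are all assumptions made for illustration. Only the `composes/(stack name)/compose.yaml` layout and `volume-exclude.txt` come from the layout above.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of update.sh and backup.sh; not the real scripts.
set -eu

# With DRY_RUN=1, commands are printed instead of executed.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Print the name of every stack directory that contains a compose.yaml.
list_stacks() {
  local repo="$1" file
  for file in "$repo"/composes/*/compose.yaml; do
    [ -e "$file" ] || continue   # the glob matched nothing
    basename "$(dirname "$file")"
  done
}

# update.sh: fetch the repository, then pull and rebuild each stack.
# Assumes stack names contain no whitespace.
update_all() {
  local repo="$1" stack
  run git -C "$repo" pull --ff-only
  for stack in $(list_stacks "$repo"); do
    run docker compose -f "$repo/composes/$stack/compose.yaml" pull
    run docker compose -f "$repo/composes/$stack/compose.yaml" up -d --build
  done
}

# backup.sh: archive the volumes directory with BorgBackup.
# borg_repo and volumes_dir are placeholder arguments.
backup_volumes() {
  local repo="$1" borg_repo="$2" volumes_dir="$3"
  run borg create --exclude-from "$repo/volume-exclude.txt" \
    "$borg_repo::{hostname}-{now}" "$volumes_dir"
}
```

Calling `DRY_RUN=1 update_all /path/to/repo` prints the Git and Docker Compose commands without running them, which is a cheap way to check a change locally before a timer picks it up.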

This system has a lot of flaws, but it’s the one that works best for me and my use cases. While it does make running services for other people a lot more inconvenient, I wanted to stray away from doing that anyway, and keep my services to only myself. Things that I have to maintain that don’t impact me stress me out, and I don’t need that in my life.

I ended up taking that repository, modifying the paths/names in it, and using it for the new server. Now, let’s get to setting it up!

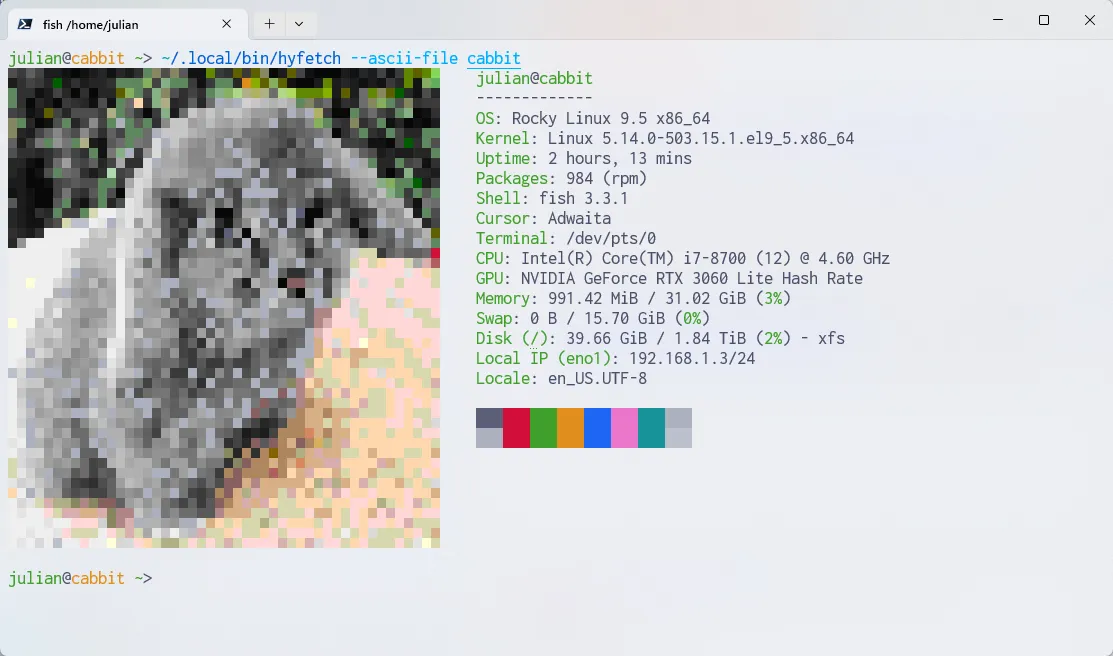

OS & storage

The machine is an old gaming PC of mine, with an i7-8700 and 32 GB of RAM. It also has an RTX 3060 in it, because it used to be a server for AI workloads, but I no longer have interest in the AI sector of technology in the slightest after seeing how terrible it has become. (That’s a blog post for another day.)

As with my latest server, I chose Rocky Linux. I have increasingly warmed up to RHEL-land and dnf (especially after using Fedora on my laptop), and a friend suggested it to me, so I thought why not. I’m going with the hostname “cabbit” - a portmanteau of “cat” and “rabbit” - because I was thinking about cabbits while flashing the installation ISO.

The rootfs will be a 2 TB SSD from a previous project. After installation, I installed Docker and set up the infrastructure user. There was a problem, though - I noticed that the installer only allocated 70 GB for the rootfs, while the rest of the almost 2 TB went to the /home partition! I ended up finding a GitHub Gist on how to delete the home partition and resize the root, so I did that (with some help from the comments when I encountered an error message).

After all that, I just had to wait for the drives to arrive. We’re going with 4x14 TB hard drives - WD Reds - in a ZFS pool. I picked ZFS because it seemed the most stable, and because I had very little experience with it and wanted to build up my skills. I picked one drive up from the local Micro Center and ordered the other three online, so the chances of getting several from the same batch were lower.

After Newegg delayed my drive through the weekend, I got the drives installed and set up a ZFS pool & filesystem with raidz2. I chose the name “poolian” because it’s funny.
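As a rough sanity check on capacity: raidz2 spends two disks’ worth of space on parity and survives any two drive failures, so four 14 TB drives leave about 28 TB usable before ZFS overhead. The helper below is only back-of-the-envelope arithmetic, and the `zpool create` line in the comment is a hypothetical example with placeholder device paths, not the exact command that was run here.

```shell
# Rough usable capacity of a single raidz vdev, ignoring ZFS metadata,
# padding, and TB-vs-TiB differences: (disks - parity) * disk size.
raidz_usable_tb() {
  local disks="$1" size_tb="$2" parity="$3"
  echo $(( ($disks - $parity) * $size_tb ))
}

raidz_usable_tb 4 14 2   # prints 28

# Hypothetical pool creation; DISK1..DISK4 are placeholder device IDs:
#   zpool create poolian raidz2 \
#     /dev/disk/by-id/DISK1 /dev/disk/by-id/DISK2 \
#     /dev/disk/by-id/DISK3 /dev/disk/by-id/DISK4
```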

I went with the following structure on disk:

    volumes/
        (various folders for Docker bind mounting)
    media/
        (shared folder for family files)
    samba/
        (per-user samba shares)

I set up a Samba share for me and my dad, and then started working on the first service to be deployed (a Jellyfin instance).

Deploying Jellyfin over Tailscale

I love Tailscale a lot, but never really used a lot of its potential. I wanted to try serving some internal services in my home (Jellyfin, Home Assistant, etc.) through it. I ended up finding caddy-tailscale, and it seems like a perfect fit - I can create ephemeral nodes that proxy to other services!

My composes follow the prefix of type-service, so web-caddy for websites. For these internal things, I adopted the internal type, so internal-caddy and internal-jellyfin and so on. After stealing a Dockerfile from a GitHub issue, configuring the Caddyfile was easy:

    {
        email redacted
        tailscale {
            ephemeral
        }
    }

    :80 {
        bind tailscale/jellyfin
        reverse_proxy jellyfin:8096
    }

Then, I just had to make an internal-caddy Docker network and put the Jellyfin container into it. I also exposed the port through Docker to the LAN, so devices like smart TVs don’t need a Tailscale connection.

As said before, this machine also has a GPU in it, so I was able to connect it to Jellyfin for hardware encoding. I already had the NVIDIA drivers installed, so I just had to install an extra package and run a command to set up Docker support, then edit the compose.yaml to use it. There’s a full tutorial on Jellyfin’s documentation site which does a better job at explaining it.

Moving the rest

I didn’t want to have any downtime, so I copied my NGINX configs into Caddy 1:1 and copied the website directories over. The idea was that I’d just change the port in my firewall, start Caddy, and it’d reverse proxy to the old existing services until I could Dockerize them. I was very pleased with how easy and readable the Caddyfile config for this was!

I changed the destination address in my firewall, started the service, and… it just worked? Nice! Literally everything worked first try, which I’m very happy with.

I first moved Forgejo and the LDAP server that it uses. Moving the LDAP server was fine, but the export from the gitea dump command didn’t actually line up with either the paths on disk or in the Docker container, which was very confusing. I spun up a test instance of the Docker container and compared the filesystem to figure out where to put everything, and had to do some config file surgery for the data directory and missing fields that were only in the Docker container.

There’s a lot of stuff that wasn’t properly declared through Nix on the old server - one off services that don’t need to be running for long, or things that are more personal projects. Moving these was mostly easy, because not being bound by Nix makes it easy to do things the non-Nix way. I can just install and run Rust instead of needing to pop open a Nix shell or modify the environment. It’s simpler for quick hacks, and that’s the point.

Could I have declared them? Yes - at a cost. The services would need to be reachable to build (= in a repository that’s either public or has access configured), and I would have had to write NixOS modules for everything. This just isn’t worth it for a personal Discord bot or an eightyeightthirty.one scraper node - these aren’t important enough for me to spend my time Nix-ifying them.

That’s my real gripe with Nix - it works when it works, but breaking out of that declarative box is so hard, especially with how running executables works with the interpreter. The ecosystem is designed intentionally to force you into doing everything the Nix way, for better and for worse, and the worse parts bit me far more than the better parts. I have a lot of respect for people who have the time to configure it, but I don’t want to be doing that for everything.

The results

Things… just work! I started drafting this blog post shortly before I built the machine, and it’s been around a week since then as I type this paragraph. ZFS is eating a lot of memory, but it’s not a huge concern. There are a few services I have yet to migrate which I’ll probably kill off:

- hast =), because running a paste server that anyone can use is a liability

- The Lounge, because I use Quassel now for IRC

- Some misc game servers that haven’t seen users in months

I’ll be taking a backup of everything that I care about and shutting it off for good sometime soon. Overall, I’m really happy with this move - server maintenance is a lot less stressful for me now. The storage will come in handy for my family, and I’ll probably be lending some storage space to some close friends with BorgBackup.

On to worrying about migrating my Hetzner machines…